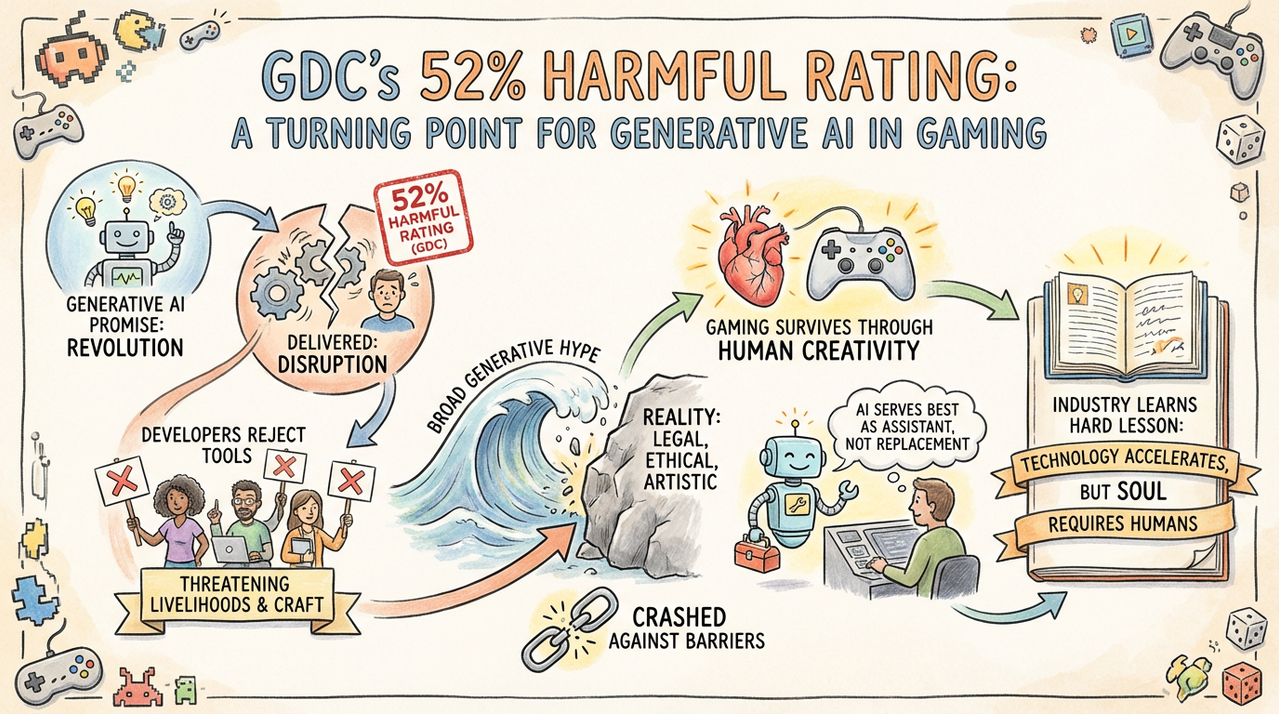

The gaming industry’s relationship with generative AI has soured dramatically. GDC’s latest State of the Game Industry survey shows 52% of developers now view AI tools as actively harmful to the sector, a sharp increase from earlier mixed feelings. Ethical concerns dominate, with creators citing job displacement, intellectual property violations, and erosion of artistic integrity as AI hype collides with development reality.

Just two years ago, AI promised revolution – dynamic NPCs, automated asset creation, accelerated prototyping. Today, developers call it a threat. The survey captures widespread frustration after years of studio experimentation yielded underwhelming results and fueled layoffs. As tools like Midjourney, Stable Diffusion, and ChatGPT flood workflows, the backlash grows louder from the very people whose work trained the models.

52% Say AI Harms Industry

GDC’s numbers tell a clear story. In 2024, 49% of studios used generative AI, mostly for marketing and business tasks. Fast forward to 2026: 52% of developers believe these tools actively damage the industry. Indie devs express the strongest opposition (58%), while AAA developers show somewhat less hostility (45%) – likely reflecting bigger budgets for AI experimentation.

Primary complaints focus on three areas:

– Job losses as AI replaces junior artists, writers, and QA testers

– Copyright violations from models trained on scraped game assets

– Quality decline as AI-generated content lacks human soul

From Promise to Backlash

2024’s GDC buzzed with AI optimism. Ubisoft demoed Neo NPCs with dynamic conversations. Tencent unveiled GiiNEX for 3D city generation. Epic, AWS, and Autodesk promised workflow revolutions. Developers tested runtime AI for smarter enemies, procedural worlds, and automated dialogue.

Reality proved colder. AI-generated textures needed constant human cleanup. ChatGPT dialogue sounded generic. Procedural levels lacked coherent design. Most critically, AI didn’t deliver the promised time savings – projects still missed deadlines while junior roles vanished. Studios that bet big on AI faced the same crunch pressures, now with demoralized teams.

| Year | AI Usage | View as Harmful | Main Concerns |
|---|---|---|---|
| 2024 | 49% studios | 26% developers | Ethics, job fears |
| 2025 | 62% studios | 38% developers | Quality, IP theft |
| 2026 | 71% studios | 52% developers | Layoffs, creativity |

Job Loss Fears Materialize

Developers watched AI promises become layoffs. Unity’s 2024 policy flip-flop cost hundreds of jobs. Epic shuttered its AI division after failed experiments. Mid-sized studios replaced concept artists with Midjourney prompts. GDC data shows a correlation: studios that adopted AI heavily cut 28% more staff than their peers.

Junior roles vanished first. Environment artists, 2D illustrators, narrative designers – exactly those whose portfolios trained public AI models. Mid-career devs now fear that AI will take over repetitive tasks, leaving room only for elite creatives. The survey reveals that 67% worry about their own job security, up 22 points from 2024.

IP Theft Nightmare

Copyright battles escalated. 2025 lawsuits from artists against Stability AI and Midjourney revealed training data scraped from DeviantArt, ArtStation, and even private Discord servers. Game studios discovered that AI models had memorized their assets – prompt for a ‘Skyrim texture pack’ and recognizable Bethesda rocks appeared.

AAA publishers face a dilemma. Public AI tools risk lawsuits. Private models require massive clean datasets. Indies can’t afford either. The result: creative paralysis. The survey shows 43% of devs avoid AI entirely due to legal fears, creating a two-tier system where only the biggest studios can experiment safely.

Quality Over Hype

AI promised acceleration and delivered mediocrity. Generated concept art looked samey. Procedural dialogue repeated cliches. Auto-animated characters moved stiffly. Developers spent more time fixing AI output than they would have spent creating the work manually. ‘AI washing’ became an industry joke – studios hyping minimal AI use for investor brownie points.

Success stories exist but are rare. Larian used AI for minor NPC chatter cleanup. Supergiant automated repetitive sound effects. These uses augment rather than replace humans. Most AI applications failed the core test: does generated content excite players? The answer is increasingly ‘no.’

Indie vs AAA Divide

Studio size predicts AI stance. Indies reject the tools (58% view them as harmful) to protect their hand-crafted identities. AAA studios experiment cautiously (45% harmful), chasing investor ROI. Mobile and free-to-play studios embrace AI most aggressively (only 32% harmful), prioritizing speed over artistry.

This fracture worries industry veterans. Recent hits – Baldur’s Gate 3, Hades 2, Helldivers 2 – succeeded through human craft, not AI. As tools proliferate, so does the risk of homogenized aesthetics drowning out unique visions. The survey warns of a ‘loss of diversity in visual styles.’

Ethical Concerns Dominate

84% express ethical worries, unchanged since 2024. Top issues:

– Uncompensated training data from working artists

– Environmental cost of AI compute

– Devaluation of human creative labor

– Potential for AI propaganda/misinformation in games

The connection to layoffs is the strongest trigger. Developers watched AI promises used to justify the 2024-2025 bloodbaths. Microsoft, Sony, EA, and Warner Bros all cited ‘AI efficiencies’ during cuts. Trust is broken – creators now see the tools as a threat, not a savior.

Future Outlook

GDC predicts a mixed path. Narrow AI (pathfinding, animation cleanup) gains acceptance. Generative tools face resistance unless they come with:

– Transparent training data sources

– Revenue sharing with original creators

– Legal frameworks protecting artists

– Demonstrable quality improvements

Optimists point to successes: procedural generation refined over decades, machine learning companions in single-player games. Pessimists fear AI will commoditize creativity, leaving only elite studios with a human touch. The survey leans pessimistic – the 52% harmful rating signals a turning point.

FAQs

Why do 52% of developers hate generative AI?

Job losses, IP theft fears, poor-quality output, and ethical concerns about scraped training data. Developers spent years creating content that trained the AI models now replacing them.

Do studios still use AI despite backlash?

Yes – 71% of studios experiment, mostly AAA and mobile. Indies resist most strongly. Usage is up despite growing developer hostility.

Has AI actually improved game quality?

Rare successes exist (minor automation), but most applications create more cleanup work than they save. Players notice generic AI content.

Will AI replace game artists?

Not elite creatives, but junior roles are already declining. Mid-career artists fear commoditization. The biggest studios can afford human polish atop AI foundations.

What about AI in shipped games?

Runtime AI (smarter NPCs, procedural dialogue) remains experimental. Ubisoft’s Neo NPCs showed promise but remain years from viability. Narrow AI (pathfinding) is accepted universally.

Can AI solve crunch?

No evidence yet. Projects that rely heavily on AI still miss deadlines. The tools create new QA and revision workloads that offset the gains.

What is the legal situation for AI art?

Chaos. The 2025 lawsuits established that artists can sue over unauthorized use of their work as training data, but no clear precedent has emerged on outcomes. Studios avoid public AI tools.

What are the best AI success stories?

Larian Studios (NPC cleanup), Supergiant (sound effects), procedural generation refinements. All augment rather than replace humans.

Conclusion

GDC’s 52% harmful rating marks a turning point. Generative AI promised revolution and delivered disruption. Developers reject tools that threaten their livelihoods and craft. While narrow applications persist, the broad generative hype crashed against reality – legal, ethical, artistic. Gaming survives through human creativity; AI serves best as an assistant, not a replacement. The industry is learning a hard lesson: technology accelerates, but soul requires humans.